Working with CUBE Cluster

Implementing CUBE Cluster should generally be performed after model development and calibration/validation. When you are satisfied with the results of your model or model process when running on a single processing node then implement CUBE Cluster to distribute sub-process of your model and fine-tune the distribution process to achieve the optimal or satisfactory run time reduction.

The DISTRIBUTE statement in CUBE Cluster globally controls the DP options:

DISTRIBUTEINTRASTEP=[T/F]MULTISTEP=[T/F]

The default is T (true) for both types of DP (INTRASTEP=T, MULTISTEP=T) when a CUBE Voyager run is started. If turned off, distributed processing will not be invoked even if there are DistributeINTRASTEP and DistributeMULTISTEP statements in the following script.

Subsequent sections discuss:

File and folder permissions in a distributed environment

When setting up a distributed process across a network using CUBE Cluster, you must ensure that all read/write permissions are properly set across the machines in the cluster.

Bentley recommends mapping the model folder to a shared drive letter and setting all file paths in the model application relative to this drive location. Set the working directories of the CUBE Voyager instances running in wait mode on each cluster node to this common drive location. With this configuration, all the file I/O references in the scripts that run from any of the node processors will correctly reference the model folder. The node machines and the main control machine must have read/write permission to the mapped model folder.

For machines configured with multiple user accounts that have different permission settings, users logged in without full administrator privileges on the main or node machines can cause access problems. Users logged in without full privileges usually do not have full read and write permissions to folders created on the same machine but with a different login ID unless permissions are explicitly given.

Limitations with Random Number Generation

If a distributed model uses random numbers, each cluster node will have its own random number generator, and thus its own random series. This is because the nodes run in separate processes.

Suppose the model’s random seed starts at zone 1 with:

IF(i==1)X=RANDSEED(12345)

If this model is distributed, the final results will be different than the standalone case, for the cluster nodes not using zone 1 will use different seeds.

However, each node’s random numbers could be explicitly spawned from the same seed (or, the necessary random series could be otherwise generated ahead of time). For example:

X=RANDSEED(12345)

All cluster nodes executing this command will use the same random series. This potentially defeats the purpose of using random number generation to begin with.

Bentley recommends against using Cluster with models with random generation, unless absolutely necessary.

When using Cluster with highway, note that:

-

If a distributed model computes link work (LW) arrays in the ILOOP phase, the results will be incorrect outside of the current I zone. This is because each distributed node will have its own copy of the arrays and they are not combined at the end of the iteration. Cluster requires link work arrays to be computed in the ADJUST phase, and used in the ILOOP phase. This is the standard usage. The only exception is when the array is computed and used within the same I zone only.

-

Use of Cluster can have a very small effect on volumes generated by the HIGHWAY program. During the ADJUST phase, when iteration volumes are combined, the final assigned volumes might vary slightly over different numbers of cluster nodes.

Please see the PARAMETERSCOMBINE EQUI sub-keyword, which controls the Standard equilibrium processing in Highway. When ENHANCE is set to 2 (Bi-conjugate Frank-Wolfe algorithm), the effect is most noticeable. The differences are less pronounced with ENHANCE = 1 (Conjugate Frank-Wolfe algorithm), and extremely minimal with ENHANCE = 0 (standard Frank-Wolfe algorithm, the default for EQUI).

The difference in all cases is negligible, and an unavoidable aspect of floating point arithmetic when distributed over cluster nodes. If this is an issue for your application, Bentley recommends standardizing the number of cores for the model.

Intrastep distributed processing (IDP)

To implement IDP, add the following statement in the appropriate Matrix or Highway script (within the Run/EndRun block):

DistributeINTRASTEP ProcessID='TestDist', ProcessList=1-4, MinGroupSize=20, SavePrn=T

This statement will invoke intrastep distributed processing in the program unless the global switch is off. Before running the job in the main computer, all the sub-process computers participating in the DP must be started and in the wait mode with the correct file name to wait for. The ProcessID and the process numbers in the ProcessList are used to make up the name of the wait file. See Cluster Utilities for tools and examples of how to start multiple instances of CUBE Voyager in WAIT mode with the appropriate settings prior to starting a distributed run.

The ProcessID is the prefix for the file names used to communicate with the sub-processes. ProcessList is a list of sub-processes to use for DP. It is a list of numbers and put in as individual numbers or ranges (for example, ProcessList=1,5,10-20,25). Each sub-process must be assigned a unique process number.

MinGroupSize is the minimum distributed zone group size. If there are more sub-processes than there are zone groups of this size, then some sub-processes will not be used. For example, if there are 100 zones and MinGroupSize is 20 and ProcessList=1-10, only 4 sub-processes will be used to process 20 zones each and the main process will process 20 zones itself.

SavePrn is a switch to control if the sub-process print files should be saved or not. The default is F (false) for this keyword. The IDP process automatically merges the script generated information (from Print statements etc.) and error messages from sub-process print files into the main print file so there is little reason to save the sub-process print files except for debugging purposes.

With the example of ProcessID=’TestDist’ and ProcessList=1-4 above, four sub-processes will be used. The script files that each of the sub-process is looking for is {ProcessID}{Process#}.script. So in this example, the 4 sub-process will start with the following script file to look for:

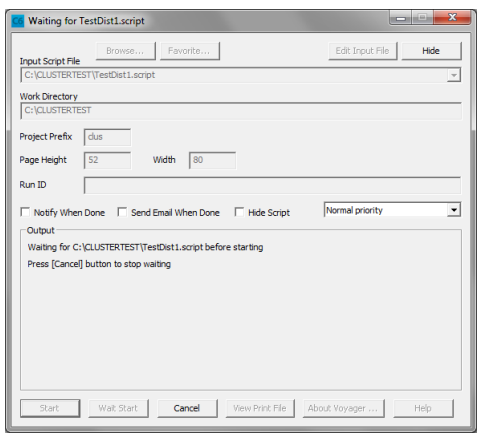

CUBE Voyager must be started on each of the processor nodes for each of the sub-processes and the above script names and common working directory set and then press the Wait Start button to go into wait mode. CUBE Voyager can also be started with command line parameters:

VoyagerTestDist1.script/wait

This will put CUBE Voyager in the wait mode automatically. If the current directory is the work directory, then no drive/path is needed when specifying the script file name, otherwise, full path name should be used when specifying the script file.

If the processor nodes of your cluster are separate single processor computers connected via a local network then you will need to start an instance of CUBE Voyager on each of the computers in your cluster and set the appropriate script name for that node. Each processor node in the cluster should correspond to one of your process numbers set with the ProcessList= keyword. For example, on the first computer in the cluster, CUBE Voyager would be launched and placed into wait mode after setting the Input Job File and the common working directory. Note that the common working directory when using networked processing nodes must be mapped on all processing nodes to the same physical location which is the working directory. If the processing nodes are all nodes on a common multiprocessor machine then multiple instances of CUBE Voyager can be started and put into wait mode directly on the multiprocessor machine and the working directory can simply be the model folder on the multiprocessor machine. Note also that both these conditions can apply. A computer cluster for distributed processing could be a mixture of a multiprocessor machine with several processing nodes connected to additional single or multiprocessor machines across a local area network. See Cluster Utilities for additional information on getting multiple instances of CUBE Voyager open and configured in wait mode.

When all the sub-processes are in wait mode, start the CUBE Voyager run like a normal run in the main computer.

Multistep distributed processing (MDP)

To implement MDP, add the following statement in the CLUSTER script at the beginning of the distributed script block:

DistributeMULTISTEPProcessID='TestDist',ProcessNum=5

Also, add the following statement in the CLUSTER script at the end of the distributed script block:

EndDistributeMULTISTEP

This statement will invoke MDP in Pilot unless the global switch is off. Before running the job in the main computer, the sub-process node participating in this MDP must be started and in the wait mode with the correct file to wait for. See Cluster Utilities for additional information on getting multiple instances of CUBE Voyager open and configured in wait mode. The ProcessID and the ProcessNum number are used to make up the name of the wait file. ProcessID is the same as defined above for IDP. ProcessNum is a single process number since the steps are distributed to one process only.

When a block of operations is distributed to another processing node, the main computer will continue running the script without waiting for the sub-process on this other processing node to finish. It is the user’s responsibility to check for sub-process completion before using output files generated by the sub-process. When a sub-process is done, it will create a file named {ProcessID}{Process#}.script.end. Use the Wait4Files command to wait for the .end file to be created in Pilot. For example:

Wait4Files Files=TestDist1.script.end, TestDist2.script.end, TestDist3.script.end, CheckReturnCode=T, UpdateVars=vname,Matrix.xname, PrintFiles=Merge, DelDistribFiles=T

Alternately, you may represent Files as a comma-separated, non- spaced list of files in a Pilot variable:

MYFILES=’TestDist1.script.end,TestDist2.script.end,TestDist3.script.end’ Wait4Files Files=@MYFILES@, CheckReturnCode=T, UpdateVars=vname,Matrix.xname, PrintFiles=Merge, DelDistribFiles=T

The Files keyword specifies a list of all the files to wait for and the CheckReturnCode keyword specifies if the return codes from the sub-processes should be checked. When true, the whole run will stop if a sub-process returns with a code 2 (fatal) or higher.

The UpdateVars keyword specifies a list of global variables computed in Pilot and logged from individual programs that should be merged back from the sub-process run. Any variables with the first part of the name matching an UpdateVars name will be merged back. For example, for UpdateVars=vname,Matrix.xname, variable vname1,vnameabc,Matrix.xnamevar etc. will all be merged back to the main process. Care must be taken to merge back ONLY the variables that need to be returned to the main process. Consider the following example:

ThisVar=1 DistributeMULTISTEP ProcessID='TestDist', ProcessNum=5 Run pgm=Matrix ... EndRun Run pgm=Network ... EndRun EndDistributeMULTISTEP ThisVar=2 Wait4Files Files=TestDist5script.end, CheckReturnCode=T, UpdateVars=ThisVar, PrintFiles=MERGE, DelDistribFiles=F

During execution, after the Wait4Files statement, the variable ThisVar will have the value of 1. This is because the subprocess inherits a copy of all the global variables to start with (ThisVar=1), so even though the subprocess never modifies the value of ThisVar, the update process will change ThisVar back to 1. The setting of ThisVar to 2 in the main process will be overwritten in this case.

The PrintFiles keyword controls the disposition of the print files from the sub-processes. It can be "MERGE," "MERGESAVE," "DELETE," or "SAVE." MERGE means the print files will be merged back into the main print file then deleted. MERGESAVE means to merge the files but not delete them. DELETE means no merge but delete them and SAVE means no merge but save them. The default is SAVE.

The DelDistribFiles keyword controls the disposition of the MDP temporary communication files. The default is true, meaning to remove all temporary files.

Examples

Intrastep distributed processing:

Run pgm=Matrix FileI MatI=... FileO MatO=... DistributeINTRASTEP ProcessID='TestDist',ProcessList=1-4 ... EndRun

Multistep distributed processing:

DistributeMULTISTEP ProcessID='TestDist', ProcessNum=5 ; the following 3 steps will be distributed to another processing node Run pgm=Matrix ... EndRun Run pgm=Network ... EndRun Run pgm=Highway ... EndRun EndDistributeMULTISTEP ; run the following 2 steps while the sub-process is doing the 3 steps above Run pgm=Public Transport ... EndRun Run pgm=Fratar ... EndRun ; wait for the sub-process to finish before continuing Wait4Files Files=TestDist5.script.end, CheckReturnCode=T ; continue running on successful end of the sub-process 5 Run pgm=Network ...

Using both Intra and Multi Step Distribution with 12 processing nodes (main, GroupA 1-4, GroupB 1-4, GroupC 2-4) to do AM, PM and Off-Peak assignments in parallel:

DistributeMULTISTEP ProcessID='GroupA', ProcessNum=1 ; the following 2 steps will be distributed to sub-process GroupA1 Run pgm=Matrix DistributeINTRASTEP ProcessID='GroupA',ProcessList=2-4 ... EndRun Run pgm=Highway DistributeINTRASTEP ProcessID='GroupA',ProcessList=2-4 ... EndRun EndDistributeMULTISTEP DistributeMULTISTEP ProcessID='GroupB', ProcessNum=1 ; the following 2 steps will be distributed to sub-process GroupB1 Run pgm=Matrix DistributeINTRASTEP ProcessID='GroupB',ProcessList=2-4 ... EndRun Run pgm=Highway DistributeINTRASTEP ProcessID='GroupB',ProcessList=2-4 ... EndRun EndDistributeMULTISTEP ; run the following 2 steps while the sub-processes are running Run pgm=Matrix DistributeINTRASTEP ProcessID='GroupC',ProcessList=2-4 ... EndRun Run pgm=Highway DistributeINTRASTEP ProcessID='GroupC',ProcessList=2-4 ... EndRun ; wait for all the sub-processes to finish before continuing Wait4Files Files=GroupA1.script.end, GroupB1.script.end,CheckReturnCode=T Run pgm=Network ...

Procedures that disable intrastep distributed processing

In addition to the requirement of independent zone processing in IDP, the following commands/options will cause IDP to turn off automatically due to data storage, calculation or input/output requirements that would overtake any benefits that IDP would provide:

The following commands work in IDP mode but their behavior may be different in IDP:

-

ABORT — The main process and any subprocess that encountered this command will abort that process but other processes will continue to execute until the end. The main process will then abort the run.

-

EXIT — The main process and any subprocess that encountered this command will stop the current ILOOP phase for that process but other processes will continue to execute until the end of the ILOOP phase.

-

ARRAY/SORT — Each process has its own arrays so the arrays will have to be filled in and sort on each process.

-

IF (I=1) and IF (I=Zones) type statements to perform certain calculation/initialization/summary processing only once per ILOOP phase will not work because not all processes will process zone 1 and the last zone. Change the checks to use the following 3 new system variables to determine if it is the first zone processed, the last zone processed and what is the current process number:

-

FIRSTZONE — The first zone processed in the current processor. It will be 1 for a normal (non-DP) run and will be the first zone to process in a DP run or a run with the SELECTI keyword. For example, ‘IF (I=FirstZone)’ to perform initialization on the first zone processed.

-

LASTZONE — The last zone processed in the current processor. It will be "zones" in a normal run and will the last zone to process in a DP run or a run with the SELECTI keyword. For example, "IF (I=LastZone)" to perform finalization on the last zone processed.

-

THISPROCESS — The current process number. It will be -1 for a normal run, 0 for the DP main controller, and the process number in a DP sub-process. With this a script can tell if it is running in non-DP mode (ThisProcess = -1), it is running as the main (ThisProcess = 0), or it is running as a node (ThisProcess > 0).

Using IDP for steps that summarize zonal values

In general, any step that summarizes zonal values may not use intrastep DP because zones are processed in independent processes. However, under certain circumstances, a step may be restructured to utilize IDP. For example, if a step summarize occupied single family dwelling units (SFDU) by zone type and writes out a RECO file with a record for each zone type:

; original script, sum occ. SFDU by ZoneType run pgm=matrix zdati=lu.dbf, z=zone ; has fields ZoneType and SFDU reco=lusum.dbf, fields=ZoneType,SFDU array SFbyType=9,SFOcc=9 zones=3000 if (i = 1) ; initial occupancy table SFOcc[1]=0.91 SFOcc[2]=0.92 SFOcc[3]=0.93 SFOcc[4]=0.94 SFOcc[5]=0.95 SFOcc[6]=0.96 SFOcc[7]=0.97 SFOcc[8]=0.98 SFOcc[9]=0.99 endif SFbyType[zi.1.ZoneType]=SFbyType[zi.1.ZoneType]+zi.1.SFDU*SFOcc[zi.1.ZoneType] if (i = zones) ; write RECO at last zone loop zt=1,9 ro.ZoneType=zt ro.SFDU=SFbyType[zt] write reco=1 endloop endif endrun

This step can be restructured into two steps, one to get the summary for each Cluster node and a second step to combine the multiple data records for each zone type into a single record for each zone type:

; Cluster script run pgm=matrix DISTRIBUTEINTRASTEP PROCESSID='TESTDIST', PROCESSLIST=1-3 zdati=lu.dbf, z=zone ; has fields ZoneType and SFDU ; write to temp reco file, use more precision on SFDU field reco=tlusum.dbf, fields=ZoneType(3.0),SFDU(13.5) array SFbyType=9,SFOcc=9 zones=3000 if (i = FirstZone) ; can not use if (i=1) SFOcc[1]=0.91 SFOcc[2]=0.92 SFOcc[3]=0.93 SFOcc[4]=0.94 SFOcc[5]=0.95 SFOcc[6]=0.96 SFOcc[7]=0.97 SFOcc[8]=0.98 SFOcc[9]=0.99 endif SFbyType[zi.1.ZoneType]=SFbyType[zi.1.ZoneType]+zi.1.SFDU*SFOcc[zi.1.ZoneType] if (i = LastZone) loop zt=1,9 ro.ZoneType=zt ro.SFDU=SFbyType[zt] write reco=1 endloop endif endrun ; extra step to combine RECO back to one record per ZoneType run pgm=matrix zdati=tlusum.dbf, z=ZoneType,sum=SFDU reco=lusum.dbf, fields=ZoneType(3.0),SFDU(10.2) zones=9 ; write out the combined dbf record in the first zone processed loop zt=1,zones ro.ZoneType = zt ro.SFDU = zi.1.SFDU[zt] write reco=1 endloop exit endrun